Training and inference with Colab

Google Colab is a free service for running notebooks with GPU/TPU support. This makes it a great way to use DAS if you do not have a computer with a GPU.

This notebook demonstrates

how to set up DAS

how to load your own datasets

how to train a network and then use that network to label a new audio recording.

Open and edit this notebook in Colab by clicking this badge:

Let’s make sure we are using TensorFlow v2+:

%tensorflow_version 2.x
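If you want to confirm from Python that TensorFlow 2.x is active and that Colab has attached a GPU (select one under Runtime > Change runtime type), you can use a short check like the one below. It is a minimal sketch and is not part of DAS. The helper `major_version` is hypothetical. `tf.config.list_physical_devices` is the TensorFlow 2.x call that lists visible devices, and it returns an empty list when no GPU is available.

```python
import importlib.util


def major_version(version_string: str) -> int:
    """Return the major version number of a version string such as '2.6.0'."""
    return int(version_string.split(".")[0])


if importlib.util.find_spec("tensorflow") is not None:
    import tensorflow as tf

    # Stop early if a TensorFlow 1.x runtime is active.
    assert major_version(tf.__version__) >= 2, f"TensorFlow {tf.__version__} is too old"
    # An empty list means no GPU is attached to this runtime.
    gpus = tf.config.list_physical_devices("GPU")
    print(f"TensorFlow {tf.__version__}, GPUs: {gpus}")
else:
    gpus = []
    print("TensorFlow is not installed in this environment.")
```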

Install DAS:

!pip install das

Collecting das

Downloading das-0.22.1-py3-none-any.whl (77 kB)

|████████████████████████████████| 77 kB 3.0 MB/s

Requirement already satisfied: scipy in /usr/local/lib/python3.7/dist-packages (from das) (1.4.1)

Requirement already satisfied: numpy in /usr/local/lib/python3.7/dist-packages (from das) (1.19.5)

Collecting zarr

Downloading zarr-2.10.0-py3-none-any.whl (146 kB)

|████████████████████████████████| 146 kB 7.1 MB/s

Requirement already satisfied: librosa in /usr/local/lib/python3.7/dist-packages (from das) (0.8.1)

Requirement already satisfied: pandas in /usr/local/lib/python3.7/dist-packages (from das) (1.1.5)

Collecting flammkuchen

Downloading flammkuchen-0.9.2-py2.py3-none-any.whl (16 kB)

Requirement already satisfied: matplotlib in /usr/local/lib/python3.7/dist-packages (from das) (3.2.2)

Requirement already satisfied: scikit-learn in /usr/local/lib/python3.7/dist-packages (from das) (0.22.2.post1)

Collecting peakutils

Downloading PeakUtils-1.3.3-py3-none-any.whl (7.7 kB)

Collecting defopt

Downloading defopt-6.1.0.tar.gz (35 kB)

Collecting matplotlib_scalebar

Downloading matplotlib_scalebar-0.7.2-py2.py3-none-any.whl (17 kB)

Requirement already satisfied: h5py in /usr/local/lib/python3.7/dist-packages (from das) (3.1.0)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.7/dist-packages (from das) (3.13)

Requirement already satisfied: docutils>=0.10 in /usr/local/lib/python3.7/dist-packages (from defopt->das) (0.17.1)

Collecting sphinxcontrib-napoleon>=0.7.0

Downloading sphinxcontrib_napoleon-0.7-py2.py3-none-any.whl (17 kB)

Requirement already satisfied: typing_extensions>=3.7.4 in /usr/local/lib/python3.7/dist-packages (from defopt->das) (3.7.4.3)

Collecting typing_inspect>=0.3.1

Downloading typing_inspect-0.7.1-py3-none-any.whl (8.4 kB)

Requirement already satisfied: six>=1.5.2 in /usr/local/lib/python3.7/dist-packages (from sphinxcontrib-napoleon>=0.7.0->defopt->das) (1.15.0)

Collecting pockets>=0.3

Downloading pockets-0.9.1-py2.py3-none-any.whl (26 kB)

Collecting mypy-extensions>=0.3.0

Downloading mypy_extensions-0.4.3-py2.py3-none-any.whl (4.5 kB)

Requirement already satisfied: tables in /usr/local/lib/python3.7/dist-packages (from flammkuchen->das) (3.4.4)

Requirement already satisfied: cached-property in /usr/local/lib/python3.7/dist-packages (from h5py->das) (1.5.2)

Requirement already satisfied: joblib>=0.14 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (1.0.1)

Requirement already satisfied: soundfile>=0.10.2 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (0.10.3.post1)

Requirement already satisfied: numba>=0.43.0 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (0.51.2)

Requirement already satisfied: decorator>=3.0.0 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (4.4.2)

Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (21.0)

Requirement already satisfied: pooch>=1.0 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (1.5.1)

Requirement already satisfied: resampy>=0.2.2 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (0.2.2)

Requirement already satisfied: audioread>=2.0.0 in /usr/local/lib/python3.7/dist-packages (from librosa->das) (2.1.9)

Requirement already satisfied: setuptools in /usr/local/lib/python3.7/dist-packages (from numba>=0.43.0->librosa->das) (57.4.0)

Requirement already satisfied: llvmlite<0.35,>=0.34.0.dev0 in /usr/local/lib/python3.7/dist-packages (from numba>=0.43.0->librosa->das) (0.34.0)

Requirement already satisfied: pyparsing>=2.0.2 in /usr/local/lib/python3.7/dist-packages (from packaging>=20.0->librosa->das) (2.4.7)

Requirement already satisfied: requests in /usr/local/lib/python3.7/dist-packages (from pooch>=1.0->librosa->das) (2.23.0)

Requirement already satisfied: appdirs in /usr/local/lib/python3.7/dist-packages (from pooch>=1.0->librosa->das) (1.4.4)

Requirement already satisfied: cffi>=1.0 in /usr/local/lib/python3.7/dist-packages (from soundfile>=0.10.2->librosa->das) (1.14.6)

Requirement already satisfied: pycparser in /usr/local/lib/python3.7/dist-packages (from cffi>=1.0->soundfile>=0.10.2->librosa->das) (2.20)

Requirement already satisfied: python-dateutil>=2.1 in /usr/local/lib/python3.7/dist-packages (from matplotlib->das) (2.8.2)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.7/dist-packages (from matplotlib->das) (0.10.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.7/dist-packages (from matplotlib->das) (1.3.2)

Requirement already satisfied: pytz>=2017.2 in /usr/local/lib/python3.7/dist-packages (from pandas->das) (2018.9)

Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.7/dist-packages (from requests->pooch>=1.0->librosa->das) (2.10)

Requirement already satisfied: chardet<4,>=3.0.2 in /usr/local/lib/python3.7/dist-packages (from requests->pooch>=1.0->librosa->das) (3.0.4)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.7/dist-packages (from requests->pooch>=1.0->librosa->das) (2021.5.30)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /usr/local/lib/python3.7/dist-packages (from requests->pooch>=1.0->librosa->das) (1.24.3)

Requirement already satisfied: numexpr>=2.5.2 in /usr/local/lib/python3.7/dist-packages (from tables->flammkuchen->das) (2.7.3)

Collecting numcodecs>=0.6.4

Downloading numcodecs-0.9.1-cp37-cp37m-manylinux2010_x86_64.whl (6.2 MB)

|████████████████████████████████| 6.2 MB 19.4 MB/s

Collecting fasteners

Downloading fasteners-0.16.3-py2.py3-none-any.whl (28 kB)

Collecting asciitree

Downloading asciitree-0.3.3.tar.gz (4.0 kB)

Building wheels for collected packages: defopt, asciitree

Building wheel for defopt (setup.py) ... done

Created wheel for defopt: filename=defopt-6.1.0-py3-none-any.whl size=14367 sha256=e283684ef3edbd107a13b2d88a61e4e1738bc416d8d1fe07af4499c65761f01a

Stored in directory: /root/.cache/pip/wheels/8e/80/07/63d08d3ae3870730bdc3f4d639af4a141f50aa0da27e183912

Building wheel for asciitree (setup.py) ... done

Created wheel for asciitree: filename=asciitree-0.3.3-py3-none-any.whl size=5051 sha256=8b134871e2a3d88db6eeeb1d6938976d2892be448eff2ecc74944e9eca70293f

Stored in directory: /root/.cache/pip/wheels/12/1c/38/0def51e15add93bff3f4bf9c248b94db0839b980b8535e72a0

Successfully built defopt asciitree

Installing collected packages: pockets, mypy-extensions, typing-inspect, sphinxcontrib-napoleon, numcodecs, fasteners, asciitree, zarr, peakutils, matplotlib-scalebar, flammkuchen, defopt, das

Successfully installed asciitree-0.3.3 das-0.22.1 defopt-6.1.0 fasteners-0.16.3 flammkuchen-0.9.2 matplotlib-scalebar-0.7.2 mypy-extensions-0.4.3 numcodecs-0.9.1 peakutils-1.3.3 pockets-0.9.1 sphinxcontrib-napoleon-0.7 typing-inspect-0.7.1 zarr-2.10.0

Import all the things:

import das.train, das.predict, das.utils, das.npy_dir

import matplotlib.pyplot as plt

import flammkuchen

import logging

logging.basicConfig(level=logging.INFO)

Mount your Google Drive so you can access your own datasets. This will ask for authentication.

from google.colab import drive

drive.mount('/content/drive')

Mounted at /content/drive
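Before starting a long training run, it helps to check that the dataset directory is actually visible on the mounted drive. The sketch below is a hypothetical helper that is not part of DAS. It only checks that the path is a directory and lists its entries. It does not validate the DAS dataset format.

```python
import os


def list_dataset_dir(path: str) -> list:
    """Return the sorted entries of a dataset directory, failing loudly if it is missing."""
    if not os.path.isdir(path):
        raise FileNotFoundError(
            f"{path} is not a directory. Is Google Drive mounted and the path correct?"
        )
    return sorted(os.listdir(path))


# Hypothetical usage with the dataset path used in this notebook:
# print(list_dataset_dir('/content/drive/MyDrive/Dmoj.wrigleyi.npy'))
```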

Train the model

Adjust the variable path_to_data to point to the dataset on your own Google Drive.

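path_to_data = '/content/drive/MyDrive/Dmoj.wrigleyi.npy'

das.train.train(model_name='tcn',  # temporal convolutional network ("TCN" in the model summary)
                data_dir=path_to_data,
                save_dir='res',  # output directory; the model is saved as res/<timestamp>_model.h5
                nb_hist=1024,  # samples per input chunk
                kernel_size=32,  # convolution kernel size in samples
                nb_filters=32,  # filters per convolutional layer
                ignore_boundaries=True,
                verbose=2,  # print one line per epoch
                nb_conv=4,
                learning_rate=0.0005,
                use_separable=[True, True, False, False],
                nb_epoch=1000)  # maximum number of training epochs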

INFO:root:Loading data from /content/drive/MyDrive/Dmoj.wrigleyi.npy.

INFO:root:Version of the data:

INFO:root: MD5 hash of /content/drive/MyDrive/Dmoj.wrigleyi.npy is

INFO:root: 5381c36663f3b7286b0a5c42c0e3e463

INFO:root:Parameters:

INFO:root:{'data_dir': '/content/drive/MyDrive/Dmoj.wrigleyi.npy', 'y_suffix': '', 'save_dir': 'res', 'save_prefix': '', 'model_name': 'tcn', 'nb_filters': 32, 'kernel_size': 32, 'nb_conv': 4, 'use_separable': [True, True, False, False], 'nb_hist': 1024, 'ignore_boundaries': True, 'batch_norm': True, 'nb_pre_conv': 0, 'pre_nb_dft': 64, 'pre_kernel_size': 3, 'pre_nb_filters': 16, 'pre_nb_conv': 2, 'nb_lstm_units': 0, 'verbose': 2, 'batch_size': 32, 'nb_epoch': 1000, 'learning_rate': 0.0005, 'reduce_lr': False, 'reduce_lr_patience': 5, 'fraction_data': None, 'seed': None, 'batch_level_subsampling': False, 'tensorboard': False, 'neptune_api_token': None, 'neptune_project': None, 'log_messages': False, 'nb_stacks': 2, 'with_y_hist': True, 'x_suffix': '', 'balance': False, 'version_data': True, 'sample_weight_mode': 'temporal', 'data_padding': 128, 'return_sequences': True, 'stride': 768, 'y_offset': 0, 'output_stride': 1, 'class_names': ['noise', 'pulse'], 'class_types': ['segment', 'event'], 'filename_endsample_test': [], 'filename_endsample_train': [], 'filename_endsample_val': [], 'filename_startsample_test': [], 'filename_startsample_train': [], 'filename_startsample_val': [], 'filename_train': [], 'filename_val': [], 'samplerate_x_Hz': 10000.0, 'samplerate_y_Hz': 10000.0, 'filename_test': [], 'data_hash': '5381c36663f3b7286b0a5c42c0e3e463', 'nb_freq': 16, 'nb_channels': 16, 'nb_classes': 2, 'first_sample_train': 0, 'last_sample_train': None, 'first_sample_val': 0, 'last_sample_val': None}

INFO:root:Preparing data

INFO:root:Training data:

INFO:root: AudioSequence with 47 batches each with 32 items.

Total of 1158000 samples with

each x=(16,) and

each y=(2,)

INFO:root:Validation data:

INFO:root: AudioSequence with 15 batches each with 32 items.

Total of 386000 samples with

each x=(16,) and

each y=(2,)
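The batch counts in the log can be reproduced from the logged parameters with some simple arithmetic. The sketch assumes that each item is a window of nb_hist samples, that consecutive windows start stride = nb_hist - 2 * data_padding samples apart, and that incomplete batches are dropped. These assumptions match the logged values ('stride': 768, 'data_padding': 128), but this is not DAS's own indexing code, and the function name is hypothetical. The durations use 'samplerate_x_Hz': 10000.0 from the parameter log.

```python
def batches_per_epoch(nb_samples: int, nb_hist: int, data_padding: int, batch_size: int) -> int:
    """Full batches of windows obtainable from a recording of nb_samples samples."""
    stride = nb_hist - 2 * data_padding  # 1024 - 2 * 128 = 768, as in the log
    nb_windows = nb_samples // stride
    return nb_windows // batch_size


samplerate_hz = 10_000.0  # 'samplerate_x_Hz' in the parameter log
nb_hist, data_padding, batch_size = 1024, 128, 32

train_batches = batches_per_epoch(1_158_000, nb_hist, data_padding, batch_size)
val_batches = batches_per_epoch(386_000, nb_hist, data_padding, batch_size)
print(train_batches, val_batches)  # 47 15

# Durations in seconds, from the sample counts and the sample rate
print(nb_hist / samplerate_hz)    # 0.1024 s of audio per window
print(1_158_000 / samplerate_hz)  # 115.8 s of training audio
print(386_000 / samplerate_hz)    # 38.6 s of validation audio
```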

INFO:root:building network

/usr/local/lib/python3.7/dist-packages/keras/optimizer_v2/optimizer_v2.py:356: UserWarning: The `lr` argument is deprecated, use `learning_rate` instead.

"The `lr` argument is deprecated, use `learning_rate` instead.")

INFO:root:None

INFO:root:Will save to res/20210925_132436.

INFO:root:start training

Model: "TCN"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 1024, 16)] 0

__________________________________________________________________________________________________

conv1d (Conv1D) (None, 1024, 32) 544 input_1[0][0]

__________________________________________________________________________________________________

separable_conv1d (SeparableConv (None, 1024, 32) 8224 conv1d[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 1024, 32) 0 separable_conv1d[0][0]

__________________________________________________________________________________________________

lambda (Lambda) (None, 1024, 32) 0 activation[0][0]

__________________________________________________________________________________________________

spatial_dropout1d (SpatialDropo (None, 1024, 32) 0 lambda[0][0]

__________________________________________________________________________________________________

conv1d_1 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d[0][0]

__________________________________________________________________________________________________

add (Add) (None, 1024, 32) 0 conv1d[0][0]

conv1d_1[0][0]

__________________________________________________________________________________________________

separable_conv1d_1 (SeparableCo (None, 1024, 32) 8224 add[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 1024, 32) 0 separable_conv1d_1[0][0]

__________________________________________________________________________________________________

lambda_1 (Lambda) (None, 1024, 32) 0 activation_1[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_1 (SpatialDro (None, 1024, 32) 0 lambda_1[0][0]

__________________________________________________________________________________________________

conv1d_2 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_1[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 1024, 32) 0 add[0][0]

conv1d_2[0][0]

__________________________________________________________________________________________________

separable_conv1d_2 (SeparableCo (None, 1024, 32) 8224 add_1[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 1024, 32) 0 separable_conv1d_2[0][0]

__________________________________________________________________________________________________

lambda_2 (Lambda) (None, 1024, 32) 0 activation_2[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_2 (SpatialDro (None, 1024, 32) 0 lambda_2[0][0]

__________________________________________________________________________________________________

conv1d_3 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_2[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 1024, 32) 0 add_1[0][0]

conv1d_3[0][0]

__________________________________________________________________________________________________

separable_conv1d_3 (SeparableCo (None, 1024, 32) 8224 add_2[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 1024, 32) 0 separable_conv1d_3[0][0]

__________________________________________________________________________________________________

lambda_3 (Lambda) (None, 1024, 32) 0 activation_3[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_3 (SpatialDro (None, 1024, 32) 0 lambda_3[0][0]

__________________________________________________________________________________________________

conv1d_4 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_3[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 1024, 32) 0 add_2[0][0]

conv1d_4[0][0]

__________________________________________________________________________________________________

separable_conv1d_4 (SeparableCo (None, 1024, 32) 8224 add_3[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 1024, 32) 0 separable_conv1d_4[0][0]

__________________________________________________________________________________________________

lambda_4 (Lambda) (None, 1024, 32) 0 activation_4[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_4 (SpatialDro (None, 1024, 32) 0 lambda_4[0][0]

__________________________________________________________________________________________________

conv1d_5 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_4[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 1024, 32) 0 add_3[0][0]

conv1d_5[0][0]

__________________________________________________________________________________________________

separable_conv1d_5 (SeparableCo (None, 1024, 32) 8224 add_4[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 1024, 32) 0 separable_conv1d_5[0][0]

__________________________________________________________________________________________________

lambda_5 (Lambda) (None, 1024, 32) 0 activation_5[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_5 (SpatialDro (None, 1024, 32) 0 lambda_5[0][0]

__________________________________________________________________________________________________

conv1d_6 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_5[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 1024, 32) 0 add_4[0][0]

conv1d_6[0][0]

__________________________________________________________________________________________________

separable_conv1d_6 (SeparableCo (None, 1024, 32) 8224 add_5[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 1024, 32) 0 separable_conv1d_6[0][0]

__________________________________________________________________________________________________

lambda_6 (Lambda) (None, 1024, 32) 0 activation_6[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_6 (SpatialDro (None, 1024, 32) 0 lambda_6[0][0]

__________________________________________________________________________________________________

conv1d_7 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_6[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 1024, 32) 0 add_5[0][0]

conv1d_7[0][0]

__________________________________________________________________________________________________

separable_conv1d_7 (SeparableCo (None, 1024, 32) 8224 add_6[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 1024, 32) 0 separable_conv1d_7[0][0]

__________________________________________________________________________________________________

lambda_7 (Lambda) (None, 1024, 32) 0 activation_7[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_7 (SpatialDro (None, 1024, 32) 0 lambda_7[0][0]

__________________________________________________________________________________________________

conv1d_8 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_7[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 1024, 32) 0 add_6[0][0]

conv1d_8[0][0]

__________________________________________________________________________________________________

separable_conv1d_8 (SeparableCo (None, 1024, 32) 8224 add_7[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 1024, 32) 0 separable_conv1d_8[0][0]

__________________________________________________________________________________________________

lambda_8 (Lambda) (None, 1024, 32) 0 activation_8[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_8 (SpatialDro (None, 1024, 32) 0 lambda_8[0][0]

__________________________________________________________________________________________________

conv1d_9 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_8[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 1024, 32) 0 add_7[0][0]

conv1d_9[0][0]

__________________________________________________________________________________________________

separable_conv1d_9 (SeparableCo (None, 1024, 32) 8224 add_8[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 1024, 32) 0 separable_conv1d_9[0][0]

__________________________________________________________________________________________________

lambda_9 (Lambda) (None, 1024, 32) 0 activation_9[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_9 (SpatialDro (None, 1024, 32) 0 lambda_9[0][0]

__________________________________________________________________________________________________

conv1d_10 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_9[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 1024, 32) 0 add_8[0][0]

conv1d_10[0][0]

__________________________________________________________________________________________________

conv1d_11 (Conv1D) (None, 1024, 32) 32800 add_9[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 1024, 32) 0 conv1d_11[0][0]

__________________________________________________________________________________________________

lambda_10 (Lambda) (None, 1024, 32) 0 activation_10[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_10 (SpatialDr (None, 1024, 32) 0 lambda_10[0][0]

__________________________________________________________________________________________________

conv1d_12 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_10[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 1024, 32) 0 add_9[0][0]

conv1d_12[0][0]

__________________________________________________________________________________________________

conv1d_13 (Conv1D) (None, 1024, 32) 32800 add_10[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 1024, 32) 0 conv1d_13[0][0]

__________________________________________________________________________________________________

lambda_11 (Lambda) (None, 1024, 32) 0 activation_11[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_11 (SpatialDr (None, 1024, 32) 0 lambda_11[0][0]

__________________________________________________________________________________________________

conv1d_14 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_11[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 1024, 32) 0 add_10[0][0]

conv1d_14[0][0]

__________________________________________________________________________________________________

conv1d_15 (Conv1D) (None, 1024, 32) 32800 add_11[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 1024, 32) 0 conv1d_15[0][0]

__________________________________________________________________________________________________

lambda_12 (Lambda) (None, 1024, 32) 0 activation_12[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_12 (SpatialDr (None, 1024, 32) 0 lambda_12[0][0]

__________________________________________________________________________________________________

conv1d_16 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_12[0][0]

__________________________________________________________________________________________________

add_12 (Add) (None, 1024, 32) 0 add_11[0][0]

conv1d_16[0][0]

__________________________________________________________________________________________________

conv1d_17 (Conv1D) (None, 1024, 32) 32800 add_12[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 1024, 32) 0 conv1d_17[0][0]

__________________________________________________________________________________________________

lambda_13 (Lambda) (None, 1024, 32) 0 activation_13[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_13 (SpatialDr (None, 1024, 32) 0 lambda_13[0][0]

__________________________________________________________________________________________________

conv1d_18 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_13[0][0]

__________________________________________________________________________________________________

add_13 (Add) (None, 1024, 32) 0 add_12[0][0]

conv1d_18[0][0]

__________________________________________________________________________________________________

conv1d_19 (Conv1D) (None, 1024, 32) 32800 add_13[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 1024, 32) 0 conv1d_19[0][0]

__________________________________________________________________________________________________

lambda_14 (Lambda) (None, 1024, 32) 0 activation_14[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_14 (SpatialDr (None, 1024, 32) 0 lambda_14[0][0]

__________________________________________________________________________________________________

conv1d_20 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_14[0][0]

__________________________________________________________________________________________________

add_14 (Add) (None, 1024, 32) 0 add_13[0][0]

conv1d_20[0][0]

__________________________________________________________________________________________________

conv1d_21 (Conv1D) (None, 1024, 32) 32800 add_14[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 1024, 32) 0 conv1d_21[0][0]

__________________________________________________________________________________________________

lambda_15 (Lambda) (None, 1024, 32) 0 activation_15[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_15 (SpatialDr (None, 1024, 32) 0 lambda_15[0][0]

__________________________________________________________________________________________________

conv1d_22 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_15[0][0]

__________________________________________________________________________________________________

add_15 (Add) (None, 1024, 32) 0 add_14[0][0]

conv1d_22[0][0]

__________________________________________________________________________________________________

conv1d_23 (Conv1D) (None, 1024, 32) 32800 add_15[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 1024, 32) 0 conv1d_23[0][0]

__________________________________________________________________________________________________

lambda_16 (Lambda) (None, 1024, 32) 0 activation_16[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_16 (SpatialDr (None, 1024, 32) 0 lambda_16[0][0]

__________________________________________________________________________________________________

conv1d_24 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_16[0][0]

__________________________________________________________________________________________________

add_16 (Add) (None, 1024, 32) 0 add_15[0][0]

conv1d_24[0][0]

__________________________________________________________________________________________________

conv1d_25 (Conv1D) (None, 1024, 32) 32800 add_16[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 1024, 32) 0 conv1d_25[0][0]

__________________________________________________________________________________________________

lambda_17 (Lambda) (None, 1024, 32) 0 activation_17[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_17 (SpatialDr (None, 1024, 32) 0 lambda_17[0][0]

__________________________________________________________________________________________________

conv1d_26 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_17[0][0]

__________________________________________________________________________________________________

add_17 (Add) (None, 1024, 32) 0 add_16[0][0]

conv1d_26[0][0]

__________________________________________________________________________________________________

conv1d_27 (Conv1D) (None, 1024, 32) 32800 add_17[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 1024, 32) 0 conv1d_27[0][0]

__________________________________________________________________________________________________

lambda_18 (Lambda) (None, 1024, 32) 0 activation_18[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_18 (SpatialDr (None, 1024, 32) 0 lambda_18[0][0]

__________________________________________________________________________________________________

conv1d_28 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_18[0][0]

__________________________________________________________________________________________________

add_18 (Add) (None, 1024, 32) 0 add_17[0][0]

conv1d_28[0][0]

__________________________________________________________________________________________________

conv1d_29 (Conv1D) (None, 1024, 32) 32800 add_18[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 1024, 32) 0 conv1d_29[0][0]

__________________________________________________________________________________________________

lambda_19 (Lambda) (None, 1024, 32) 0 activation_19[0][0]

__________________________________________________________________________________________________

spatial_dropout1d_19 (SpatialDr (None, 1024, 32) 0 lambda_19[0][0]

__________________________________________________________________________________________________

conv1d_30 (Conv1D) (None, 1024, 32) 1056 spatial_dropout1d_19[0][0]

__________________________________________________________________________________________________

add_20 (Add) (None, 1024, 32) 0 conv1d_1[0][0]

conv1d_2[0][0]

conv1d_3[0][0]

conv1d_4[0][0]

conv1d_5[0][0]

conv1d_6[0][0]

conv1d_7[0][0]

conv1d_8[0][0]

conv1d_9[0][0]

conv1d_10[0][0]

conv1d_12[0][0]

conv1d_14[0][0]

conv1d_16[0][0]

conv1d_18[0][0]

conv1d_20[0][0]

conv1d_22[0][0]

conv1d_24[0][0]

conv1d_26[0][0]

conv1d_28[0][0]

conv1d_30[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 1024, 32) 0 add_20[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 1024, 2) 66 activation_20[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 1024, 2) 0 dense[0][0]

==================================================================================================

Total params: 431,970

Trainable params: 431,970

Non-trainable params: 0

__________________________________________________________________________________________________
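The parameter counts in the summary can be checked by hand. For a Keras Conv1D layer, the count is kernel_size × input channels × filters, plus one bias per filter. A Dense layer applied to the last axis has the same count as a size-1 convolution. The sketch below reproduces the total of 431,970 by counting the layer types listed in the summary above. It takes the 8,224 parameters of each SeparableConv1D layer directly from the summary rather than deriving them, and the helper name is hypothetical.

```python
def conv1d_params(kernel_size: int, in_channels: int, filters: int) -> int:
    """Weights plus one bias per filter for a Conv1D layer (or a Dense layer on the last axis)."""
    return kernel_size * in_channels * filters + filters


input_proj = conv1d_params(1, 16, 32)  # conv1d: 16 input channels -> 32 filters, 544
block_1x1 = conv1d_params(1, 32, 32)   # conv1d_1 ... conv1d_10, conv1d_12, ...: 1056 each
wide_conv = conv1d_params(32, 32, 32)  # conv1d_11, conv1d_13, ..., conv1d_29: 32800 each
dense_out = conv1d_params(1, 32, 2)    # dense: 32 channels -> 2 classes, 66
separable = 8224                       # per SeparableConv1D layer, read off the summary

# Layer counts from the summary: 10 separable, 20 size-1 convs, 10 kernel-32 convs
total = input_proj + 10 * separable + 20 * block_1x1 + 10 * wide_conv + dense_out
print(total)  # 431970
```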

Epoch 1/1000

/usr/local/lib/python3.7/dist-packages/keras/engine/training.py:2470: UserWarning: `Model.state_updates` will be removed in a future version. This property should not be used in TensorFlow 2.0, as `updates` are applied automatically.

warnings.warn('`Model.state_updates` will be removed in a future version. '

Epoch 00001: val_loss improved from inf to 0.04569, saving model to res/20210925_132436_model.h5

/usr/local/lib/python3.7/dist-packages/keras/utils/generic_utils.py:497: CustomMaskWarning: Custom mask layers require a config and must override get_config. When loading, the custom mask layer must be passed to the custom_objects argument.

category=CustomMaskWarning)

47/47 - 68s - loss: 0.0796 - val_loss: 0.0457

Epoch 2/1000

Epoch 00002: val_loss improved from 0.04569 to 0.04298, saving model to res/20210925_132436_model.h5

47/47 - 21s - loss: 0.0333 - val_loss: 0.0430

Epoch 3/1000

Epoch 00003: val_loss improved from 0.04298 to 0.04224, saving model to res/20210925_132436_model.h5

47/47 - 21s - loss: 0.0325 - val_loss: 0.0422

Epoch 4/1000

Epoch 00004: val_loss improved from 0.04224 to 0.04108, saving model to res/20210925_132436_model.h5

47/47 - 21s - loss: 0.0317 - val_loss: 0.0411

Epoch 5/1000

Epoch 00005: val_loss did not improve from 0.04108

47/47 - 21s - loss: 0.0328 - val_loss: 0.0416

Epoch 6/1000

Epoch 00006: val_loss improved from 0.04108 to 0.04089, saving model to res/20210925_132436_model.h5

47/47 - 21s - loss: 0.0301 - val_loss: 0.0409

Epoch 7/1000

Epoch 00007: val_loss did not improve from 0.04089

47/47 - 21s - loss: 0.0303 - val_loss: 0.0410

Epoch 8/1000

Epoch 00008: val_loss did not improve from 0.04089

47/47 - 21s - loss: 0.0289 - val_loss: 0.0410

Epoch 9/1000

Epoch 00009: val_loss did not improve from 0.04089

47/47 - 21s - loss: 0.0302 - val_loss: 0.0413

Epoch 10/1000

Epoch 00010: val_loss improved from 0.04089 to 0.04059, saving model to res/20210925_132436_model.h5

47/47 - 21s - loss: 0.0283 - val_loss: 0.0406

Epoch 11/1000

Epoch 00011: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0269 - val_loss: 0.0409

Epoch 12/1000

Epoch 00012: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0279 - val_loss: 0.0410

Epoch 13/1000

Epoch 00013: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0278 - val_loss: 0.0410

Epoch 14/1000

Epoch 00014: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0267 - val_loss: 0.0417

Epoch 15/1000

Epoch 00015: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0267 - val_loss: 0.0409

Epoch 16/1000

Epoch 00016: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0265 - val_loss: 0.0408

Epoch 17/1000

Epoch 00017: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0266 - val_loss: 0.0415

Epoch 18/1000

Epoch 00018: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0271 - val_loss: 0.0424

Epoch 19/1000

Epoch 00019: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0287 - val_loss: 0.0429

Epoch 20/1000

Epoch 00020: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0268 - val_loss: 0.0412

Epoch 21/1000

Epoch 00021: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0272 - val_loss: 0.0423

Epoch 22/1000

Epoch 00022: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0276 - val_loss: 0.0417

Epoch 23/1000

Epoch 00023: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0268 - val_loss: 0.0417

Epoch 24/1000

Epoch 00024: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0271 - val_loss: 0.0418

Epoch 25/1000

Epoch 00025: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0269 - val_loss: 0.0421

Epoch 26/1000

Epoch 00026: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0258 - val_loss: 0.0420

Epoch 27/1000

Epoch 00027: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0259 - val_loss: 0.0421

Epoch 28/1000

Epoch 00028: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0256 - val_loss: 0.0424

Epoch 29/1000

Epoch 00029: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0281 - val_loss: 0.0438

Epoch 30/1000

Epoch 00030: val_loss did not improve from 0.04059

47/47 - 21s - loss: 0.0269 - val_loss: 0.0424

INFO:root:re-loading last best model

INFO:root:predicting

INFO:root:evaluating

INFO:root:[[354461   1856]
 [  1718  10605]]

INFO:root:{'noise': {'precision': 0.9951765825610157, 'recall': 0.9947911550669769, 'f1-score': 0.9949838314881768, 'support': 356317}, 'pulse': {'precision': 0.8510552925126394, 'recall': 0.8605858962914875, 'f1-score': 0.8557940606843125, 'support': 12323}, 'accuracy': 0.9903049045138889, 'macro avg': {'precision': 0.9231159375368276, 'recall': 0.9276885256792322, 'f1-score': 0.9253889460862447, 'support': 368640}, 'weighted avg': {'precision': 0.9903588561686921, 'recall': 0.9903049045138889, 'f1-score': 0.9903309572867444, 'support': 368640}}

INFO:root:saving to res/20210925_132436_results.h5.

/usr/local/lib/python3.7/dist-packages/tables/path.py:112: NaturalNameWarning: object name is not a valid Python identifier: 'f1-score'; it does not match the pattern ``^[a-zA-Z_][a-zA-Z0-9_]*$``; you will not be able to use natural naming to access this object; using ``getattr()`` will still work, though

NaturalNameWarning)

/usr/local/lib/python3.7/dist-packages/tables/path.py:112: NaturalNameWarning: object name is not a valid Python identifier: 'macro avg'; it does not match the pattern ``^[a-zA-Z_][a-zA-Z0-9_]*$``; you will not be able to use natural naming to access this object; using ``getattr()`` will still work, though

NaturalNameWarning)

/usr/local/lib/python3.7/dist-packages/tables/path.py:112: NaturalNameWarning: object name is not a valid Python identifier: 'weighted avg'; it does not match the pattern ``^[a-zA-Z_][a-zA-Z0-9_]*$``; you will not be able to use natural naming to access this object; using ``getattr()`` will still work, though

NaturalNameWarning)

INFO:root:DONE.

(<keras.engine.functional.Functional at 0x7f269f6ed290>,

{'balance': False,

'batch_level_subsampling': False,

'batch_norm': True,

'batch_size': 32,

'class_names': ['noise', 'pulse'],

'class_types': ['segment', 'event'],

'class_weights': None,

'data_dir': '/content/drive/MyDrive/Dmoj.wrigleyi.npy',

'data_hash': '5381c36663f3b7286b0a5c42c0e3e463',

'data_padding': 128,

'filename_endsample_test': [],

'filename_endsample_train': [],

'filename_endsample_val': [],

'filename_startsample_test': [],

'filename_startsample_train': [],

'filename_startsample_val': [],

'filename_test': [],

'filename_train': [],

'filename_val': [],

'first_sample_train': 0,

'first_sample_val': 0,

'fraction_data': None,

'ignore_boundaries': True,

'kernel_size': 32,

'last_sample_train': None,

'last_sample_val': None,

'learning_rate': 0.0005,

'log_messages': False,

'model_name': 'tcn',

'nb_channels': 16,

'nb_classes': 2,

'nb_conv': 4,

'nb_epoch': 1000,

'nb_filters': 32,

'nb_freq': 16,

'nb_hist': 1024,

'nb_lstm_units': 0,

'nb_pre_conv': 0,

'nb_stacks': 2,

'neptune_project': None,

'output_stride': 1,

'pre_kernel_size': 3,

'pre_nb_conv': 2,

'pre_nb_dft': 64,

'pre_nb_filters': 16,

'reduce_lr': False,

'reduce_lr_patience': 5,

'return_sequences': True,

'sample_weight_mode': 'temporal',

'samplerate_x_Hz': 10000.0,

'samplerate_y_Hz': 10000.0,

'save_dir': 'res',

'save_prefix': '',

'seed': None,

'stride': 768,

'tensorboard': False,

'use_separable': [True, True, False, False],

'verbose': 2,

'version_data': True,

'with_y_hist': True,

'x_suffix': '',

'y_offset': 0,

'y_suffix': ''})
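The per-class scores in the evaluation report come straight from the logged confusion matrix. The sketch below recomputes them in plain Python. It assumes rows are true classes and columns are predicted classes, which is the scikit-learn convention and is the reading that reproduces the logged precision, recall and support values.

```python
def report_from_confusion_matrix(cm, class_names):
    """Per-class precision, recall, F1 and support, plus overall accuracy.

    cm[i][j] counts samples of true class i that were predicted as class j.
    """
    n = len(class_names)
    total = sum(sum(row) for row in cm)
    report = {}
    for i, name in enumerate(class_names):
        tp = cm[i][i]
        support = sum(cm[i])                         # row sum: samples truly in class i
        predicted = sum(cm[r][i] for r in range(n))  # column sum: samples predicted as class i
        precision = tp / predicted
        recall = tp / support
        f1 = 2 * precision * recall / (precision + recall)
        report[name] = {'precision': precision, 'recall': recall,
                        'f1-score': f1, 'support': support}
    report['accuracy'] = sum(cm[i][i] for i in range(n)) / total
    return report


# Confusion matrix from the evaluation log above.
cm = [[354461, 1856],
      [1718, 10605]]
report = report_from_confusion_matrix(cm, ['noise', 'pulse'])
print(round(report['pulse']['precision'], 4), round(report['pulse']['recall'], 4))  # 0.8511 0.8606
```

The rare class (pulse, about 3% of samples) is the informative one here. Overall accuracy (0.99) is dominated by the noise class, so the pulse precision and recall are the more meaningful scores.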

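Adjust res_name to point to your results. It is the timestamped prefix under which training saved its files (res/20210925_132436 in the log above):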

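import flammkuchen
# path prefix of the files written by training; change the timestamp to match your run
res_name = '/content/res/20210925_132436'
res = flammkuchen.load(f'{res_name}_results.h5')  # test data, predictions and training history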
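Instead of typing the timestamp by hand, you can pick the newest results file in the save directory. The helper below is hypothetical and not part of DAS. It assumes file names of the form YYYYMMDD_HHMMSS_results.h5 (as in res/20210925_132436_results.h5) with an empty save_prefix, so that alphabetical order matches chronological order.

```python
from pathlib import Path


def latest_results_prefix(save_dir='/content/res'):
    """Hypothetical helper: path prefix of the newest '*_results.h5' file in save_dir.

    Assumes '<YYYYMMDD_HHMMSS>_results.h5' names, so sorting by name sorts by time.
    """
    candidates = sorted(Path(save_dir).glob('*_results.h5'))
    if not candidates:
        raise FileNotFoundError(f'no *_results.h5 files in {save_dir}')
    newest = candidates[-1]
    # strip the '_results.h5' suffix to get the prefix shared with '_model.h5' etc.
    return str(newest.with_name(newest.name[: -len('_results.h5')]))
```

Usage: res_name = latest_results_prefix(), followed by the flammkuchen.load call above. If you set a non-empty save_prefix during training, sort on the timestamp part of the name instead.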

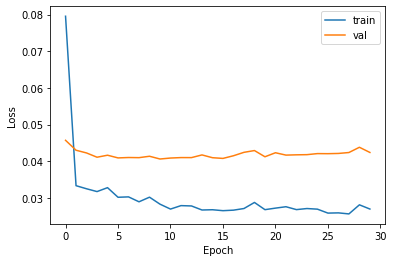

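Inspect the history of the training and validation loss:

import matplotlib.pyplot as plt
plt.plot(res['fit_hist']['loss'], label='train')  # loss on the training set, per epoch
plt.plot(res['fit_hist']['val_loss'], label='val')  # loss on the validation set, per epoch
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()
plt.show()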

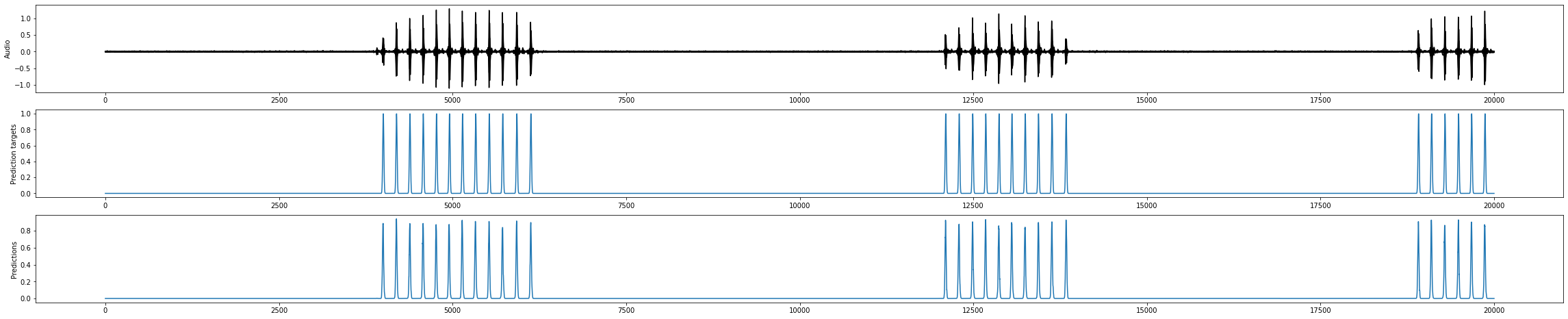
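The training log follows a checkpoint-plus-early-stopping pattern. The model is saved whenever val_loss improves. Training ended after epoch 30, which is 20 epochs after the last improvement at epoch 10. The sketch below replays that rule on the val_loss values printed in the log. The patience of 20 is inferred from the log and is not read from DAS's configuration.

```python
def replay_early_stopping(val_losses, patience):
    """Replay 'keep the best val_loss, stop after `patience` epochs without improvement'.

    Returns (best_epoch, stop_epoch), both 1-based.
    """
    best = float('inf')
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0  # a checkpoint would be saved here
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)


# val_loss per epoch, copied from the training log above (4 decimals)
val_losses = [0.0457, 0.0430, 0.0422, 0.0411, 0.0416, 0.0409, 0.0410, 0.0410, 0.0413, 0.0406,
              0.0409, 0.0410, 0.0410, 0.0417, 0.0409, 0.0408, 0.0415, 0.0424, 0.0429, 0.0412,
              0.0423, 0.0417, 0.0417, 0.0418, 0.0421, 0.0420, 0.0421, 0.0424, 0.0438, 0.0424]
print(replay_early_stopping(val_losses, patience=20))  # (10, 30)
```

This is also why the log reports "re-loading last best model" before evaluation: the weights used for testing are those from epoch 10, not from the final epoch.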

Plot the test results:

# t0, t1 = 1_020_000, 1_040_000  # window for the dmel tutorial dataset
t0, t1 = 40_000, 60_000  # window (in samples) to plot
plt.figure(figsize=(40, 8))
plt.subplot(311)
plt.plot(res['x_test'][t0:t1], 'k')  # audio, all channels
plt.ylabel('Audio')
plt.subplot(312)
plt.plot(res['y_test'][t0:t1, 1:])  # manual annotations; column 0 ('noise') is skipped
plt.ylabel('Prediction targets')
plt.subplot(313)
plt.plot(res['y_pred'][t0:t1, 1:])  # network output for the non-noise classes
plt.ylabel('Predictions')
plt.show()

You can download the model and results files from /content/res via the Files tab on the left.

Predict on new data

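Load the trained model and a recording for prediction. Here, the test split of the dataset used for training stands in for a new recording: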

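import das.npy_dir, das.predict, das.utils
model, params = das.utils.load_model_and_params(res_name)  # load the trained model and its runtime parameters
# load the dataset; the large train and val splits are memory-mapped rather than read into RAM
ds = das.npy_dir.load('/content/drive/MyDrive/Dmoj.wrigleyi.npy', memmap_dirs=['train', 'val'])
print(ds)
x = ds['test']['x']  # test audio, shape (samples, channels)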

Data:
    test:
        x: (386000, 16)
        y: (386000, 2)
    val:
        y: (386000, 2)
        x: (386000, 16)
    train:
        y: (1158000, 2)
        x: (1158000, 16)
Attributes:
    class_names: ['noise', 'pulse']
    class_types: ['segment', 'event']
    filename_endsample_test: []
    filename_endsample_train: []
    filename_endsample_val: []
    filename_startsample_test: []
    filename_startsample_train: []
    filename_startsample_val: []
    filename_train: []
    filename_val: []
    samplerate_x_Hz: 10000.0
    samplerate_y_Hz: 10000.0
    filename_test: []
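The memmap_dirs=['train', 'val'] argument presumably keeps the large training and validation splits on disk, because only the test split is needed for prediction. The sketch below shows what memory mapping means using plain NumPy rather than DAS's loader. The array is a small hypothetical stand-in for a split with 16 audio channels.

```python
import os
import tempfile

import numpy as np

# Hypothetical stand-in for a large split: 1000 samples x 16 channels, float32.
x = np.random.default_rng(0).standard_normal((1000, 16)).astype(np.float32)
path = os.path.join(tempfile.mkdtemp(), 'x.npy')
np.save(path, x)

# mmap_mode='r' returns a read-only np.memmap: data stay on disk and are read on access.
x_mm = np.load(path, mmap_mode='r')
print(type(x_mm).__name__, x_mm.shape)  # memmap (1000, 16)

# Only this slice is copied into memory.
batch = np.asarray(x_mm[100:132])
```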

Run inference. This computes the per-sample class probabilities (confidence scores) and extracts segment boundaries and event times:

events, segments, class_probabilities, _ = das.predict.predict(x, model=model, params=params, verbose=1)

/usr/local/lib/python3.7/dist-packages/keras/engine/training.py:2470: UserWarning: `Model.state_updates` will be removed in a future version. This property should not be used in TensorFlow 2.0, as `updates` are applied automatically.

warnings.warn('`Model.state_updates` will be removed in a future version. '

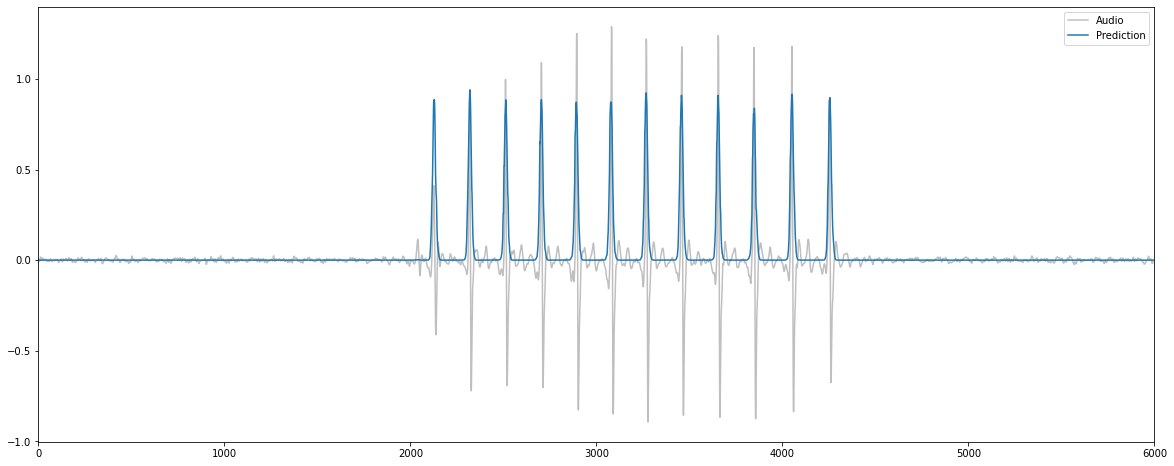
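Conceptually, segment boundaries and event times can be derived from a class-probability trace. The sketch below is a simplified illustration, not DAS's internal implementation. It thresholds the probability to get onsets and offsets, then takes the peak of each supra-threshold run as the event time. The threshold of 0.5 and both function names are assumptions.

```python
import numpy as np


def segments_from_probabilities(prob, threshold=0.5):
    """Onset and offset sample indices of runs where prob exceeds threshold.

    Offsets are exclusive: samples onsets[k] .. offsets[k] - 1 are above threshold.
    """
    above = np.concatenate(([False], np.asarray(prob) > threshold, [False]))
    edges = np.diff(above.astype(np.int8))  # +1 at rising edges, -1 at falling edges
    onsets = np.flatnonzero(edges == 1)
    offsets = np.flatnonzero(edges == -1)
    return onsets, offsets


def events_from_probabilities(prob, threshold=0.5):
    """One event time (in samples) per supra-threshold run: the run's probability peak."""
    prob = np.asarray(prob)
    onsets, offsets = segments_from_probabilities(prob, threshold)
    return np.array([on + int(np.argmax(prob[on:off])) for on, off in zip(onsets, offsets)], dtype=int)


# Toy probability trace for a single class (hypothetical values).
prob = np.array([0.0, 0.2, 0.7, 0.9, 0.6, 0.1, 0.0, 0.8, 0.3])
print(segments_from_probabilities(prob))  # (array([2, 7]), array([5, 8]))
print(events_from_probabilities(prob))    # [3 7]
```

Applied to this notebook, events_from_probabilities(class_probabilities[:, 1]) / params['samplerate_y_Hz'] would give rough pulse times in seconds. DAS's own post-processing may differ, for example in thresholds or in how close events may be to each other.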

t0, t1 = 42_000, 48_000  # window (in samples) to plot
plt.figure(figsize=(20, 8))
plt.plot(x[t0:t1, 0], alpha=0.25, c='k', label='Audio')  # first of the 16 audio channels
plt.plot(class_probabilities[t0:t1, 1:], label='Prediction')  # probability of 'pulse'; column 0 is 'noise'
plt.xlim(0, t1 - t0)
plt.legend()
plt.show()