Inferential statistics#

Often, we are not only interested in describing our data with descriptive statistics like the mean and standard deviation, but want to know whether two or more sets of measurements are likely to come from the same underlying distribution. We want to draw inferences from the data. This is what inferential statistics is about.

To learn how to do this in python, let’s use some example data:

To test whether a new wonder drug increases the eye sight, Linda and Anabel ran the following experiment with student subjects:

Experimental subjects were injected a saline solution containing 1nM of the wonder drug. Control subjects were injected saline without the drug. The drug is only effective for an hour or so. To assess the effect of the drug, eye sight was scored by testing the subjects’ ability to read small text within one hour of drug injection.

However, Linda and Anabel used two different experimental designs:

Linda tested each student on ten consecutive days and measured the performance after each experiment. She used 50 control (saline only) and 50 experimental subjects (saline+drug) - so 100 subjects in total, 10 measurements (score after injection) per subject.

Anabel only performed a single test per subject, but she measured the eye sight 30 minutes before and 30 minutes after the treatment. She tested 60 different subjects with two measurements each (score before and score after injection).

Our task is now to determine whether the wonder drug really improves eye sight as tested in these two sets of experiments.

Let’s start with the first dataset:

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import scipy

plt.style.use('ncb.mplstyle')

# load and explore the data

df1 = pd.read_csv('dat/5.03_inferential_stats_design1.csv') # Linda's data

display(df1)

| animal | treatment | score_after | |

|---|---|---|---|

| 0 | 0 | 0 | 10.053951 |

| 1 | 0 | 0 | 5.894092 |

| 2 | 0 | 0 | 13.447026 |

| 3 | 0 | 0 | 6.579613 |

| 4 | 0 | 0 | 8.482990 |

| ... | ... | ... | ... |

| 995 | 99 | 1 | 12.000260 |

| 996 | 99 | 1 | 12.277938 |

| 997 | 99 | 1 | 13.718489 |

| 998 | 99 | 1 | 14.272301 |

| 999 | 99 | 1 | 13.211777 |

1000 rows × 3 columns

What do we need to know about the data to choose the correct statistical tests?#

We want to determine whether the treatment improves of eye sight. What is our Null Hypothesis, what is our Alternative Hypothesis?

Null hypothesis:

Alternative hypothesis:

What does inferential statistics do? With some probability:

prove the Null?

disprove the Null?

prove the Alternative?

disprove the Alternative?

The p-value is a probability - probability of what?

What decisions do we need to make to select the correct test?

normally dist?

equal variance?

signed/unsigned?

paired/unpaired? independence?

Plot your data!#

Okay, but what is the first thing you do when you get a bunch of data? Plot it!! Why? Can’t we just look at summary statistics? No, they are not sufficient to fully describe the distribution and can be misleading!!

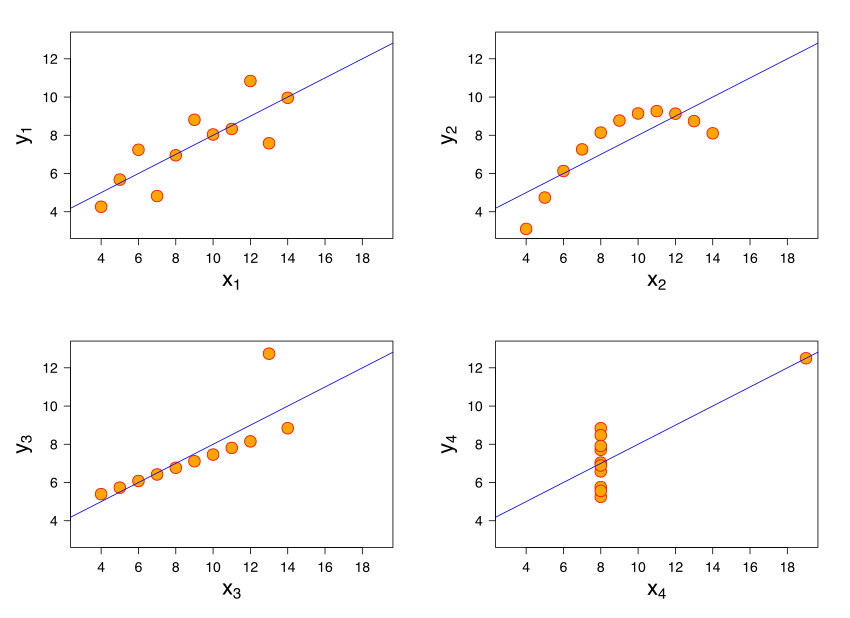

Anscombe’s quartet is a famous example that illustrates that fact. It shows 4 sets of data:

These four data sets are very different, but they have similar statistics:

Mean of x: 9

Sample variance of x: 11

Mean of y: 7.50

Sample variance of y: 4.125

Correlation between x and y: 0.816

Linear regression line: y = 3.00 + 0.500x

Coefficient of determination of the linear regression \(R^{2}\): 0.67

An even more extreme example - the data dinosaur:

Let’s start with analyzing the first dataset:

df1

| animal | treatment | score_after | |

|---|---|---|---|

| 0 | 0 | 0 | 10.053951 |

| 1 | 0 | 0 | 5.894092 |

| 2 | 0 | 0 | 13.447026 |

| 3 | 0 | 0 | 6.579613 |

| 4 | 0 | 0 | 8.482990 |

| ... | ... | ... | ... |

| 995 | 99 | 1 | 12.000260 |

| 996 | 99 | 1 | 12.277938 |

| 997 | 99 | 1 | 13.718489 |

| 998 | 99 | 1 | 14.272301 |

| 999 | 99 | 1 | 13.211777 |

1000 rows × 3 columns

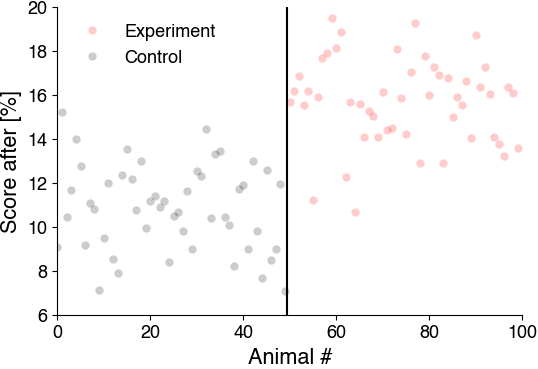

Let’s plot the data:

# Split DataFrame into treatment and control parts

experiment = df1[df1['treatment']==1]

control = df1[df1['treatment']==0]

# plot animal id (x-axis) vs. scores (y-axis)

plt.figure(figsize=(12, 4))

plt.plot(experiment['animal'], experiment['score_after'], 'or', alpha=0.2, label='Experiment')

plt.plot(control['animal'], control['score_after'], 'ok', alpha=0.2, label='Control')

plt.xlabel('Animal #')

plt.ylabel('Score after [%]')

plt.axvline(49.5, c='k')

plt.legend()

plt.show()

Are all samples independent? Are they paired or unpaired?#

# Data from design 1 - average the repeated tests for each animal

dict_grouped = {'animal': [], 'treatment': [], 'score_after': []} # initialize a dictionary with empty lists for holding the values

for animal in df1['animal'].unique(): # iterate over all animals

this_animal = df1[df1['animal']==animal] # get the part of the table for the current animal

dict_grouped['animal'].append(animal) # store the animal number

dict_grouped['treatment'].append(this_animal['treatment'].iloc[0]) # store the treament (0/1)

dict_grouped['score_after'].append(np.mean(this_animal['score_after'])) # compute and store the trial-averaged score for the current animal

# make DataFrame from dict

df1_avg = pd.DataFrame(dict_grouped)

df1_avg.to_csv('dat/5.03_inferential_stats_design1_clean.csv')

display(df1_avg)

print('N before averaging', df1.shape[0],', N after averaging', df1_avg.shape[0])

# Plot the aggregated data as a control

experiment = df1_avg[df1_avg['treatment']==1]

control = df1_avg[df1_avg['treatment']==0]

plt.figure(figsize=(6, 4))

plt.plot(experiment['animal'], experiment['score_after'], 'or', alpha=0.2, label='Experiment')

plt.plot(control['animal'], control['score_after'], 'ok', alpha=0.2, label='Control')

plt.xlabel('Animal #')

plt.ylabel('Score after [%]')

plt.axvline(49.5, c='k')

plt.legend()

plt.show()

| animal | treatment | score_after | |

|---|---|---|---|

| 0 | 0 | 0 | 9.097427 |

| 1 | 1 | 0 | 15.234215 |

| 2 | 2 | 0 | 10.425896 |

| 3 | 3 | 0 | 11.675589 |

| 4 | 4 | 0 | 13.972324 |

| ... | ... | ... | ... |

| 95 | 95 | 1 | 13.760092 |

| 96 | 96 | 1 | 13.203628 |

| 97 | 97 | 1 | 16.347525 |

| 98 | 98 | 1 | 16.062462 |

| 99 | 99 | 1 | 13.570623 |

100 rows × 3 columns

N before averaging 1000 , N after averaging 100

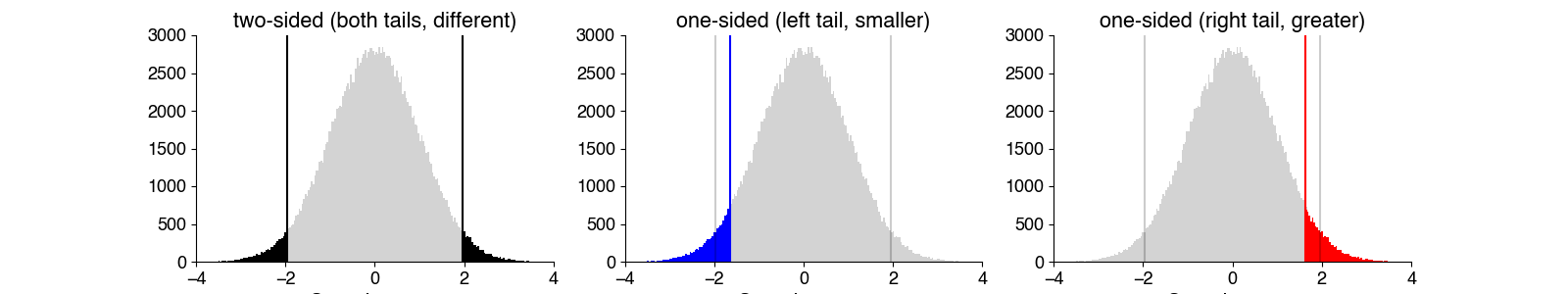

One-sided or two-sided?#

Is the data normally distributed?#

The t-test assumes that the data in the sample are normally distributed in the population. This means that the values within each group should follow a normal (Gaussian) distribution. This assumption is related to the parameters of the normal distribution, such as the mean and standard deviation.

To check for normality, we visualize the distributions, then run a statistical test. In scipy.stats, there are several tests for normality. We will use scipy.stats.normaltest, which derives a p-values based on the skew and kurtosis of the data.

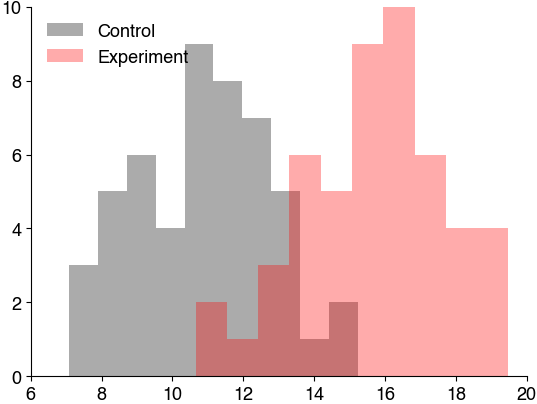

# histogram the data

# Data from design 1

experiment = df1_avg[df1_avg['treatment']==1]

control = df1_avg[df1_avg['treatment']==0]

plt.hist(control['score_after'], color='k', alpha=0.33, label='Control')

plt.hist(experiment['score_after'], color='r', alpha=0.33, label='Experiment')

plt.legend()

plt.show()

Mini exercise: Test for normality#

Do we run the test on the full dataset? Or on the individual groups (treatment and control) separately?

How do we interpret the p-values? What’s the null hypothesis when we test for normality?

# your solution here

print(scipy.stats.normaltest(df1_avg[df1_avg['treatment']==0]['score_after']))

print(scipy.stats.normaltest(df1_avg[df1_avg['treatment']==1]['score_after']))

NormaltestResult(statistic=0.977665116203375, pvalue=0.61334201754807)

NormaltestResult(statistic=1.3550283711604612, pvalue=0.5078779147507373)

If the p-value is less than the significance level, you may reject the null hypothesis and conclude that there is a statistically significant difference. This means:

With 95% probability, the sample does not originate from a normal distribution with the mean value μ0.

In 5% of cases, however, the significant difference may have been a result of chance, within the distribution of the null hypothesis.

Equal variance?#

The independent samples t-test (and its variants) assumes that the variances within the groups being compared are equal (homoscedasticity). In other words, it assumes that the spread or dispersion of the data is consistent across groups. This assumption is also related to population parameters.

However, homoscedasticity is not a hard criterion, since there exitss a variant of the t-test - Welch’s t-test - that accounts for unequal variance (heteroscedasticity).

Homoscedasticity is typically tested visually - by inspecting the data distributions - and rarely tested in practice. There do exist tests that compare the variance across multiple groups, like Levene test (scipy.stats.levene).

But, it’s best to use statistical tests that do not require equal variance, like Welch’s variant of the t-test.

Mini Exercise: Run the tests#

We now know all we need to know about our samples to select the correct test:

paired or unpaired: no

normal: yes

homoscedasticity: ?

one/two-sided: one

Check the docs to figure out how to use the correct test:

unpaired (independent):

paired (or related):

# your solution here

control = df1_avg[df1_avg['treatment']==0]

treatment = df1_avg[df1_avg['treatment']==1]

scipy.stats.ttest_ind(control['score_after'], treatment['score_after'], equal_var=False, alternative='less')

TtestResult(statistic=-12.363139612077164, pvalue=5.109456570496495e-22, df=97.9954921844401)

If you want to compare more than two groups#

First, detect group-level effects using

Anova:

scipy.stats.f_onewaynon-parameteric alternative: Kruskal-Wallis test

scipy.stats.kruskal

If p<0.05, there exist a difference between the groups.

To then detect which groups are different, you run a post hoc test:

scipy.stats.tukey_hsd or scipy.stats.dunnett

You will do this in one of the bonus exercises.